Scoir is pretty similar to Naviance from what I understand, but the school sets what you can see. These two particular schools had plenty of data. There were other schools where there was less data, therefore less confidence. Again, there were several schools like this on the list, so chances of zero working out were pretty minimal. My point is that “chances” aren’t the same across the board and historical data can add something if used responsibly.

Our S’s school also uses Naviance and does allow one to see ED (has a circle around it) or EA (has a square around it) on the scattergram. Not sure how reliable it is, since I see some EA squares around data points for schools that do not offer EA. They track the last 5 years of school data. It at least gives a good rough estimate of what GPA and test scores give the best probability of admission but doesn’t have any context (hooks, race, ECs, etc.). Given that our S’s school is a regional magnet school, the test scores all tend to be way higher than even the middle 50%, so it “appears” like you need a higher-than-average test score to get into a particular school, which suggests they are comparing students to other students from the same school.

It seems nice to have an organized daughter. My son took it down to each deadline.

Interestingly, we have some popular “likelies” where there actually are a few denials in the green zone on SCOIR, but they are so outweighed by the rest this is apparently not an issue (I also suspect the counselors know what happened in those cases and therefore know whether it is something for a given individual to worry about).

Yes. Chancing is somewhat school dependent. For example, our school sends a lot of kids to BC. Last year more than 40% of applicants were accepted. That is nowhere near the published rate. And even just looking at RD, acceptance rate was around 30%. BC is fairly local to us, they know our school and they get good yield - about 50% last year. So, for some students it is less of a reach than it would look like on paper. We’re a public school - not Catholic.

I might note by the time our kids are finalizing lists, I am not sure the distinction between an easier reach and a target really even matters any more. Hopefully it is more just a carefully considered plan involving each individual school.

Where I am pretty sure our counselors would get nervous is if a kid wanted to apply to no likelies by the strict definition.

Like, if you had two strict likelies, or one very likely, and then wanted to call some other college a likely too based on SCOIR–who cares, right?

But if you said based on SCOIR you were not going to apply to any strict likelies at all, then I suspect you would get some pushback.

That probably speaks more to your school’s population average SES. Only about 30% of their students get need based financial aid. I highly doubt that would be consistent school to school where there is a 12% acceptance rate.

Sure. That doesn’t change the fact that it’s relevant data that can be used to help someone form a list.

The first batch of PSATs is out and it feels like 760s (perfect score) in Math are a dime a dozen. I wonder if the same will happen to the SAT. If all of a sudden everyone is turning in really high scores, what will colleges make of it?

So far most colleges have said they will superscore across formats. Notably, Princeton said they won’t because it’s not the same test. Yale has an answer that I’d describe as vague, a non-answer at best.

- Amherst: Yes
- Brown: Yes
- Columbia: Yes
- Cornell: Yes
- Dartmouth: Yes
- Duke: Yes
- Emory: Yes
- Georgia Tech: Yes
- Harvard: Yes
- Northwestern: Yes
- Notre Dame: Yes
- Princeton: No; “Applicants are welcome to submit scores from multiple SAT test dates, and across multiple testing formats, if they would like them considered within our holistic review of their application. However, given their distinct differences in format, we will not SuperScore sections of the digital SAT with sections of the (paper/traditional) SAT.”
- University of Chicago: Yes
- University of Florida system: Yes
- University of Georgia: Yes
- University of Michigan: Yes
- University of Mississippi: Yes
- UPenn: Yes
- Vanderbilt: Yes
- Yale: Does not superscore to create total score, but will consider highest section scores regardless of how attained; “All SAT scores are treated equally in our evaluation process.”
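
For anyone who wants to see what the "Yes" vs. Princeton-style answer means in numbers, here is a rough sketch of the arithmetic. It is only an illustration: the `Sitting` class, the `fmt` labels, and the `superscore` function are made-up names, and the "best single-format superscore" reading of Princeton's policy is my assumption, not something they have stated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sitting:
    fmt: str   # "paper" or "digital" (hypothetical labels)
    rw: int    # reading/writing section score
    math: int  # math section score


def superscore(sittings, across_formats=True):
    """Best reading/writing score plus best math score.

    across_formats=True mixes paper and digital sittings (the "Yes" schools).
    across_formats=False only combines sections within one format and returns
    the better of the per-format results (an assumed reading of a
    no-mixing policy).
    """
    if not sittings:
        raise ValueError("need at least one sitting")
    if across_formats:
        groups = [list(sittings)]
    else:
        formats = {s.fmt for s in sittings}
        groups = [[s for s in sittings if s.fmt == f] for f in formats]
    return max(max(s.rw for s in g) + max(s.math for s in g) for g in groups)


# Made-up scores, purely for illustration.
example = [Sitting("paper", 700, 760), Sitting("digital", 750, 720)]
mixed = superscore(example)                            # 750 + 760
separate = superscore(example, across_formats=False)   # best of 1460 and 1470
```

With these made-up scores, mixing formats gives 1510, while keeping formats separate gives 1470, so the policy difference can be worth a few tens of points for a kid with lopsided sittings.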

Of course, this is mostly relevant for the Class of 2025. Our strategy is to try and lock in a solid paper score and then see how digital goes. My thinking is that colleges “understand” the paper score and may still be figuring out how to interpret the digital score if results are inconsistent with the norm. The new test is undeniably easier and I think middle of the pack and lower will benefit the most. The content is in theory the same, but it’s only 2 hours long, there are no long passages with multiple questions, and gone are those tricky historical passages.

Won’t they scale it to preserve percentiles?

Percentiles are a good point, but at the elite school level maybe not granular enough? Also, as far as the DPSAT goes, the percentile they gave was “this is where your score falls against tests taken over the past 3 years.” It doesn’t tell us much about the new test format.

They tried, but historically the early rounds of a new test are not dialed in perfectly.

Ultimately if I was running a college, I would be trying to norm the percentiles. I am not sure how much information will be released on that, however.

I think that is what they will do eventually; they will figure out what the test tells them. I am wondering (out loud) what the new format will mean for the first class. Will there be bias towards one test or the other? It is similar to the TO situation, where I think it took a couple of years of kids going through the system for them to decide if they needed the information the test provides (and they appear to have concluded that yes, they need it for a more accurate assessment).

Compass Education Group, which does test prep, put out a statement on a mailing list I am on saying that there are far more high scores in the first batch of PSAT results than were expected. If this keeps up through other batches, NMSF qualification scores will jump up.

It’s bad if they bungled the PSAT. Hopefully the same will not be true of the SAT.

Yeah, I have been following his analysis, but I am thinking we are in new territory here and he might not be able to estimate as accurately as in the past. The new scoring is even less transparent than the previous “curve” because two kids sitting next to each other will get different questions, and in the report you only get a total score: no idea how many questions you got wrong, and questions are worth different amounts.

The pathetic thing is that there is absolutely no reason with digital testing to have so many students “top out” the test, as might happen with paper tests.

If the College Board actually went with a continuously adaptive test, they could have kept a set of problems in reserve that are expected to be too hard, but present them for those that are topping out the usual set of questions. And then afterwards you can norm the test to get the distribution you need.

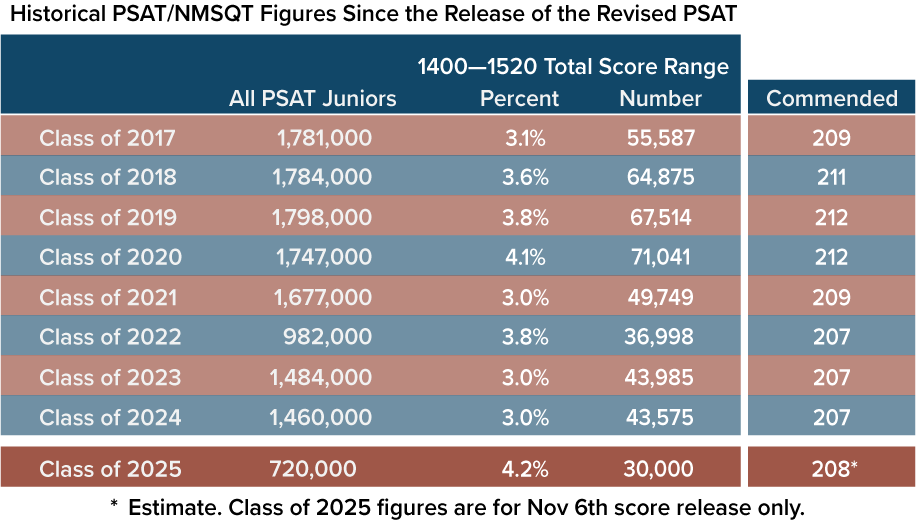

Is this statement available online? The data they published regarding the first batch of results suggest that while the share of those scoring in the top band (1400-1520) is considerably higher than in recent years (4.2% vs. 3.0%), it is comparable to the class of 2020 (4.1%) and not too far removed from 2019 and 2022 (3.9%).

In those years the commended cutoff was 212, which is a significant jump from the 207 of late. That will likely mean a wild swing in the NM cutoffs.

A key difference, IMO, is participation. The numbers appear to be more in line with recent years. When participation is low, it seems to skew towards stronger test takers (meaning weaker ones don’t bother), so 4.2% would be a significant increase from the 3% the “similar” 2023/24 groups achieved.

For reasons that are not entirely clear to me, however, the projected cutoff for commended for class of 2025 is 208, rather than 212… and in the same article, NMSF cutoffs for, say, California are not projected to spike upward too dramatically. This is all based on one score batch, of course. I am preoccupied with this as my junior did very well on this PSAT and I’m concerned about scores trending high. Maybe not as high as we suspect?